As a team of unmanned quadrotor aircraft hovers above, six small ground robots roll into an unfamiliar two-story structure. Soon, the rotorcraft are darting about mapping the upper floor, while the ground vehicles chart the lower floor, process both teams’ data, and produce a full building plan for computers outside.

Notably absent are human beings and radio control devices. This little squadron is fully autonomous.

In robotics, autonomy involves enabling unmanned vehicles to perform complex, unpredictable tasks without human guidance. Today, in the early stages of the robotics revolution, it’s among the most critical areas of research.

“The move to true autonomy has become highly important, and progress toward that goal is happening with increasing speed,” said Henrik Christensen, executive director of the Institute for Robotics and Intelligent Machines (IRIM) at Georgia Tech and a collaborator on the mapping experiment. “It won’t happen overnight, but the day is coming when you will simply say to a swarm of robots, ‘Okay, go and perform this mission.’”

Traditionally, robotic devices have been pre-programmed to perform a set task: Think of the factory robot arm that automatically performs a repetitive function like welding. But an autonomous vehicle must be fully independent, moving – without human intervention – in the air, on the ground, or in the water. Well-known examples include the prototype self-driving vehicles currently being tested in some U.S. cities.

Vehicular autonomy requires suites of sensors, supported by advanced software and computing capabilities. Sensors can include optical devices that use digital camera technology for robotic vision or video reconnaissance; inertial motion detectors such as gyroscopes; global positioning system (GPS) functionality; radar, laser, and lidar systems; pressure sensors; and more.

At Georgia Tech, researchers are developing both commercial and defense-focused technologies that support autonomous applications. This article looks at some of the Georgia Tech research teams focused on improving performance of autonomous surface, air, and marine systems.

Autonomous in Antarctica

Radio-controlled devices have been replacing humans in hazardous situations for years. Now, autonomous vehicles are starting to take on complex jobs in places where radio frequency signals don’t work.

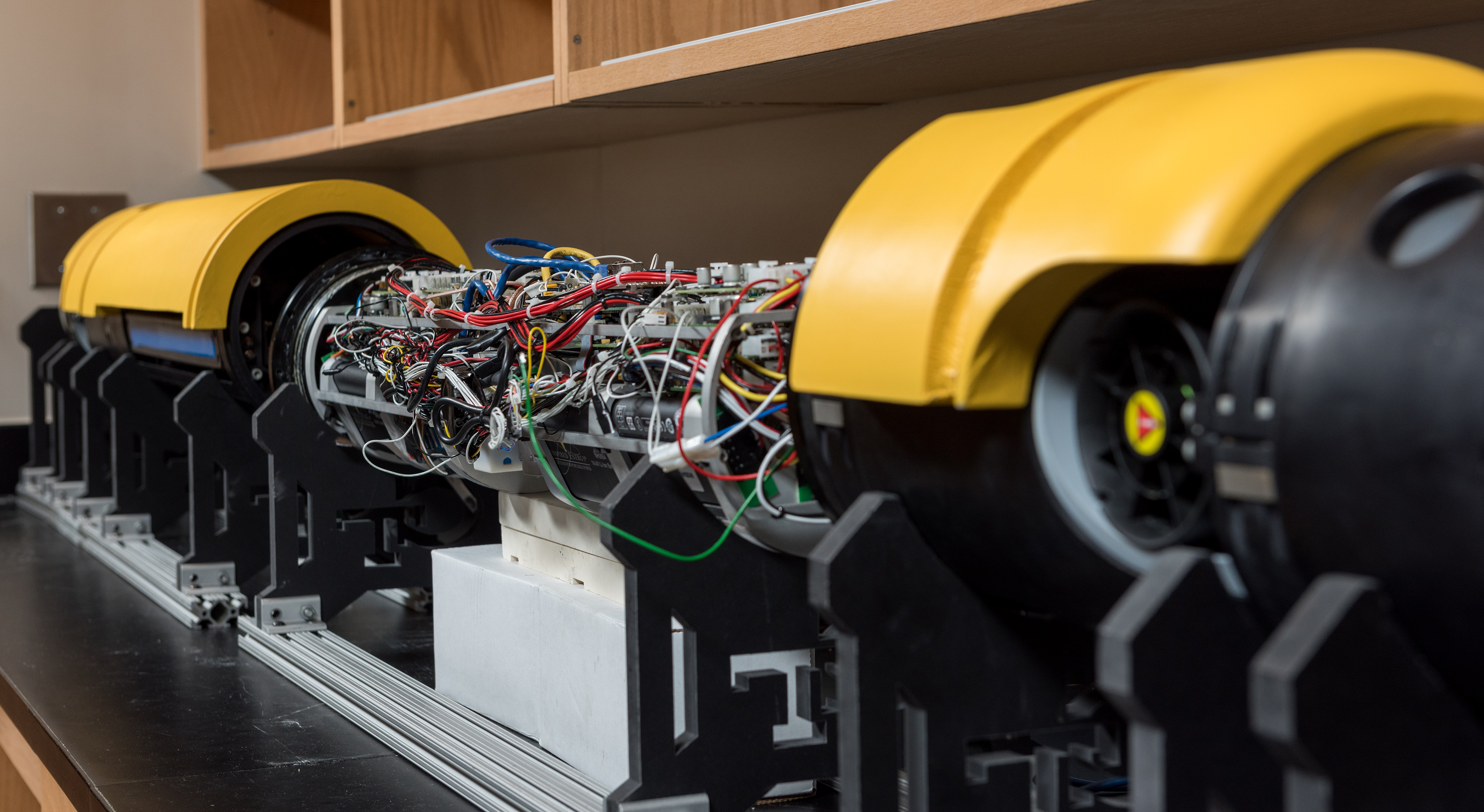

An autonomous underwater vehicle (AUV) known as Icefin has already ventured deep under the vast Ross Ice Shelf in Antarctica, simultaneously testing a unique vehicle design and gathering new information on conditions beneath the ice. Icefin was designed and built in just six months by a team led by researchers from the Georgia Tech School of Earth and Atmospheric Sciences collaborating with an engineering team from the Georgia Tech Research Institute (GTRI).

“GPS signals are blocked by water and ice under the Ross Ice Shelf, and, at the same time, the water is quite murky, making navigation with optical devices a challenge,” explained Mick West, a principal research engineer who led the GTRI development team and participated in the recent Icefin mission in Antarctica. “In some ways, this kind of marine application is really helping to push robotic autonomy forward, because in an underwater environment unmanned devices are completely on their own.”

The research team used tools suited to underwater navigation including Doppler velocity detection; sensors that perform advanced sonar imaging; and conductivity, temperature, and depth (CTD) readings. They also added inertial navigation capability in the form of a fiber optic gyroscope.

The sensors were supported by a software-based technique known as simultaneous localization and mapping (SLAM) – computer algorithms that help an autonomous vehicle map an area while simultaneously keeping track of its position in that environment. Using this approach, Icefin could check its exact position at the surface via GPS, and then track its new locations relative to that initial reference point as it moved under the ice. Alternatively, it could navigate using local features such as unique ice formations.
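The idea of taking a GPS fix at the surface and then tracking position relative to it can be illustrated with simple dead reckoning. The Python sketch below is only an illustration under stated assumptions, not Icefin's software: the function name, the sample format, and the numbers are hypothetical, and a full SLAM system would also correct accumulated drift using mapped features such as ice formations.

```python
import math

def dead_reckon(start_xy, samples):
    """Integrate (heading_rad, forward_speed_mps, dt_s) samples from a known fix.

    Heading is measured clockwise from north, so x is east and y is north.
    The sample format is an assumption made for this sketch.
    """
    x, y = start_xy
    track = [(x, y)]
    for heading_rad, speed_mps, dt_s in samples:
        # Speed could come from a Doppler velocity sensor, heading from a gyroscope.
        x += speed_mps * math.sin(heading_rad) * dt_s
        y += speed_mps * math.cos(heading_rad) * dt_s
        track.append((x, y))
    return track

# Hypothetical run: 10 s due north at 1 m/s, then 10 s due east at 1 m/s.
samples = [(0.0, 1.0, 10.0), (math.pi / 2, 1.0, 10.0)]
track = dead_reckon((0.0, 0.0), samples)
final_x, final_y = track[-1]
```

Because every step adds a little sensor error, estimates built this way drift over time, which is one reason to pair them with navigation by local features.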

Icefin successfully ventured down to 500 meters under the Ross Ice Shelf and found that the frigid waters were teeming with life. On this expedition, data was sent back from under the ice via a robust fiber-optic tether; novel technologies to transmit information wirelessly from under the water are being studied.

West’s GTRI group was part of a larger team led by Britney Schmidt, an assistant professor in the Georgia Tech School of Earth and Atmospheric Sciences and principal investigator on the Icefin project. Technologies developed for Icefin could someday help search for life in places like Europa, Jupiter’s fourth-largest moon, which is thought to have oceans similar to Antarctica’s.

“We’re advancing hypotheses that we need for Europa and understanding ocean systems here better,” Schmidt said. “We’re also developing and getting comfortable with technologies that make polar science – and eventually Europa science – more realistic.”

Real-World Performance

Autonomous technologies are generally developed in laboratories. But they must be tested, modified, and retested in more challenging environments.

Cédric Pradalier, an associate professor at the Georgia Tech-Lorraine campus in Metz, France, is working with aquatic vehicles to achieve exacting autonomous performance.

“I’m an applied roboticist. I bring together existing technologies and test them in the field,” Pradalier said. “Autonomy really becomes useful when it is precise and repeatable, and a complicated real-world environment is the place to develop those qualities.”

Pradalier is working with aquatic robots, including a 4-foot-long Kingfisher unmanned surface vessel modified with additional sensors. His current research aim is to refine the autonomous vehicle’s ability to closely monitor the shore of a lake. Using video technology, the craft surveys the water’s edge while maintaining an exact distance from the shore at a consistent speed.

As currently configured, the boat performs a complex mission that involves taking overlapping photos of the lake’s periphery. It can autonomously stop or move to other areas of the lake as needed, matching and aligning sections of the shore as they change seasonally.

Applications for such technology could include a variety of surveillance missions, as well as industrial uses such as monitoring waterways for pollution or environmental damage.

One of the advantages of autonomy for mobile applications is that a robot never gets tired of precisely executing a task, Pradalier said.

“It would be very tedious, even demanding, for a human to drive the boat at a constant distance from the shore for many hours,” he said. “Eventually, the person would get tired and start making mistakes, but if the robot is properly programmed and maintained, it can continue for as long as needed.”

Ocean-Going Software

An autonomous underwater vehicle (AUV) faces unknown and unpredictable environments. It relies on software algorithms that interact with sensors to address complex situations and adapt to unexpected events.

Fumin Zhang, an associate professor in the Georgia Tech School of Electrical and Computer Engineering (ECE), develops software that supports autonomy for vehicles that delve deep into the ocean gathering data. His work is supported by the Office of Naval Research and the National Science Foundation.

“Underwater vehicles often spend weeks in an ocean environment,” Zhang said. “Our software modules automate their operation so that oceanographers can focus on the science and avoid the stress of manually controlling a vehicle.”

The ocean is a challenging and unpredictable environment, he explained, with strong currents and even damaging encounters with sea life. Those who study underwater autonomy must plan for both expected conditions and unexpected events.

Among other things, the team is using biologically related techniques, inspired by the behavior of sea creatures, to enhance autonomous capabilities.

Zhang has also devised an algorithm that analyzes collected data and automatically builds a map of what underwater vehicles see. The resulting information helps oceanographers better understand natural phenomena.

In 2011, a Zhang student team designed and built an AUV from scratch. Working with Louisiana State University, the team used the craft to survey the Gulf of Mexico and assess underwater conditions after a massive oil spill off the U.S. coastline.

Among other novel AUVs developed by Zhang’s team is one constructed entirely of transparent materials. The design is aimed at testing optical communications underwater.

To facilitate underwater testing of AUVs, Zhang and his team have developed a method in which autonomous blimps substitute for underwater vehicles for research purposes. The blimps are flown in a large room, lessening the time needed to work in research pools.

“The aerodynamics of blimps have many similarities to the conditions encountered by underwater vehicles,” Zhang said. “This is an exciting development, and we are going full speed ahead on this project.”

Undergraduates Tackle Autonomy

At the Aerospace Systems Design Laboratory (ASDL), numerous Georgia Tech undergraduates are collaborating with professors, research faculty, and graduate students on autonomous vehicle development for sponsors that include the Naval Sea Systems Command (NAVSEA) and the Office of Naval Research (ONR).

For instance, student teams, working with the Navy Engineering Education Center (NEEC), are helping design ways to enable autonomous marine vehicles for naval-surface applications.

This year, the researchers plan to work on ways to utilize past ASDL discoveries in the areas of autonomy algorithms and the modeling of radio frequency behavior in marine environments. The aim is to exploit those technologies in a full-size robotic boat, enabling it to navigate around obstacles, avoid other vehicles, find correct landing areas, and locate sonar pingers like those used to identify downed aircraft.

Among other things, they are working on pathfinding algorithms that can handle situations where radio signals are hampered by the humid conditions found at the water’s surface. They’re developing sophisticated code to help marine networks maintain communications despite rapidly shifting ambient conditions.

In addition to the NEEC research, ASDL undergraduates regularly compete against other student teams in international autonomous watercraft competitions such as RobotX and RoboBoat.

“The tasks in these competitions are very challenging,” said Daniel Cooksey, a research engineer in ASDL. “The performance achieved by both Georgia Tech and the other student groups is really impressive.”

These competitions have inspired other spin-off undergraduate research efforts. In one project, an undergraduate team is developing autonomous capabilities for a full-size surface craft. The resulting vehicle could be used for reconnaissance and other missions, especially at night or in low-visibility conditions.

“Basically, we study the ways in which adding autonomy changes how a vehicle is designed and used,” said Cooksey. “We’re working to achieve on the water’s surface some of the performance that’s being developed for automobiles on land.”

ASDL, which is part of Georgia Tech’s Daniel Guggenheim School of Aerospace Engineering, is directed by Regents Professor Dimitri Mavris.

Diverse Robots Team Up

A major goal of today’s autonomy research is getting different robots to cooperate on complex missions. As part of the Micro Autonomous Systems and Technology (MAST) effort, an extensive development program involving 18 universities and companies, Georgia Tech has partnered with the University of Pennsylvania and the Army Research Laboratory (ARL) on developing heterogeneous robotic groups. The work is sponsored by the ARL.

In the partners’ most recent joint experiment at a Military Operations on Urban Terrain (MOUT) site, a team of six small unmanned ground vehicles and three unmanned aerial vehicles autonomously mapped an entire building. Georgia Tech researchers, directed by Professor Henrik Christensen of the Georgia Tech School of Interactive Computing, developed the mapping and exploration system as well as the ground vehicles’ autonomous navigation capability. The University of Pennsylvania team provided the aerial autonomy, and ARL handled final data integration, performance verification, and mapping improvements.

“We were able to successfully map an entire two-story structure that our unmanned vehicles had never encountered before,” said Christensen. “The ground vehicles drove in and scanned the bottom floor, and the air vehicles scanned the upper floor, and they came up with a combined model for what the whole building looks like.”

The experiment used the Omnimapper program, developed by Georgia Tech, for exploration and mapping. It employs a system of plug-in devices that handle multiple types of 2-D and 3-D measurements, including rangefinders, RGB-D computer vision devices, and other sensors. Graduate student Carlos Nieto of the School of Electrical and Computer Engineering (ECE) helped lead the Georgia Tech team participating in the experiment.

The research partners tested different exploration approaches. In the “reserve” technique, robots not yet allocated to the scanning mission remained at the starting locations until new exploration goals cropped up. When a branching point was detected by an active robot, the closest reserve robot was recruited to explore the other path.
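The recruiting step of the “reserve” technique can be sketched in a few lines of Python. This is a minimal illustration, not the experiment's actual code: the robot ids, the coordinates, and the use of straight-line distance as the measure of “closest” are all assumptions.

```python
import math

def recruit_reserve(branch_xy, reserve_positions):
    """Return the id of the reserve robot closest to a branching point, or None.

    reserve_positions maps a robot id to its (x, y) position in meters.
    Straight-line distance is an assumption; a real planner might use path length.
    """
    if not reserve_positions:
        return None
    return min(
        reserve_positions,
        key=lambda robot_id: math.dist(reserve_positions[robot_id], branch_xy),
    )

# Hypothetical reserve robots waiting near the starting locations.
reserves = {"r4": (0.0, 0.0), "r5": (1.0, 0.0), "r6": (0.0, 2.0)}
chosen = recruit_reserve((4.0, 1.0), reserves)
# The recruited robot leaves the reserve pool; the others keep waiting.
remaining = {rid: pos for rid, pos in reserves.items() if rid != chosen}
```

In this example the robot labeled `r5` is recruited because it is nearest to the branching point at (4, 1).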

In the “divide and conquer” technique, the entire robot group followed the leader until a branching point was detected. Then the group split in half, with one robot squad following the original leader while a second group followed their own newly designated leader.
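The group-splitting step of “divide and conquer” might look like the following Python sketch. It is an illustration only: the article does not say how the new leader is chosen, so promoting the first robot of the second half is an arbitrary assumption, as are the robot ids.

```python
def split_group(group):
    """Split a leader-first list of robot ids into two leader-first squads.

    The first squad keeps the original leader. The first robot of the second
    half becomes the new leader, an arbitrary choice made for this sketch.
    """
    if len(group) < 2:
        return group, []
    half = (len(group) + 1) // 2  # the original leader's squad gets any odd robot
    return group[:half], group[half:]

# Hypothetical six-robot group reaching a branching point.
group = ["r1", "r2", "r3", "r4", "r5", "r6"]
squad_a, squad_b = split_group(group)
```

Applying the same function again at later branching points would keep halving the squads until single robots explore on their own.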

In other work, researchers are applying mobile robots’ ability to search and communicate to promoting human safety during crisis situations. A team that includes GTRI research engineer Alan Wagner, ECE Professor Ayanna Howard, and ECE graduate student Paul Robinette is studying technology that can locate people in an emergency and guide them to safety.

Dubbed the rescue robot, this technology is aimed at locating people in a low-visibility situation such as a fire. Current work is concentrated on optimizing how the rescue robot interacts with humans during a dangerous and stressful situation.

When development is complete, the robot could autonomously find people and guide them to safety, and then return to look for stragglers. If it senses an unconscious person, it would summon help wirelessly and guide human or even robotic rescuers to its location.

Controlling Robot Swarms

Magnus Egerstedt, Schlumberger Professor in the Georgia Tech School of Electrical and Computer Engineering (ECE), is focused on cutting-edge methods for controlling air and ground robotic vehicles. He’s investigating how to make large numbers of autonomous robots function together effectively, and how to enable them to work harmoniously – and safely – with people.

In one project for the Air Force Office of Scientific Research, Egerstedt is investigating the best methods for enabling autonomous robots to differentiate between interactions with other robots and interactions with humans. Another important issue: getting robots to organize themselves so they’re easier for a person to control.

“Think about standing in a swarm of a million mosquito-sized robots and getting them to go somewhere or to execute a particular task,” he said. “It’s clear that somehow I need to affect the entire flow, rather than trying to manipulate individuals. My research shows that the use of human gestures is effective for this, in ways that can resemble how a conductor guides an orchestra.”

Egerstedt is using graph theory – mathematical structures used to model relations between objects – and systems theory to design multi-agent networks that respond to human prompts or similar control measures. He’s also concerned with preventing malicious takeover of the robotic swarm. To address this issue, he’s programming individual robots to recognize and reject any command that could lead to unacceptable swarm behavior.
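One textbook way to see how a graph model lets a single input steer a whole group is a leader-follower consensus update, sketched below in Python. This is a standard technique chosen for illustration and is not presented as Egerstedt's method; the four-robot chain, the step size, and the heading values are assumptions.

```python
def consensus_step(values, neighbors, leader, step=0.2):
    """One discrete consensus update; the leader's value is held fixed.

    Each follower moves toward its graph neighbors' values. The step size
    must be small relative to node degree for the update to stay stable.
    """
    updated = {}
    for node, value in values.items():
        if node == leader:
            updated[node] = value  # set externally, e.g. by a human operator
            continue
        disagreement = sum(values[n] - value for n in neighbors[node])
        updated[node] = value + step * disagreement
    return updated

# Hypothetical chain a-b-c-d; a human sets leader "a" to a heading of 90 degrees.
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
headings = {"a": 90.0, "b": 0.0, "c": 0.0, "d": 0.0}
for _ in range(500):
    headings = consensus_step(headings, neighbors, leader="a")
```

Only robot `a` receives the command, yet every robot ends up near the same heading because the influence propagates along the graph's edges. The sketch treats headings as plain numbers and ignores angle wraparound.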

One of Egerstedt’s major goals involves establishing an open access, multi-robot test bed at Georgia Tech where U.S. roboticists could run safety and security experiments on any autonomous system.

Developing Aerial Autonomy

At Georgia Tech’s Daniel Guggenheim School of Aerospace Engineering (AE), faculty-student teams are involved in a wide range of projects involving autonomous aerial vehicles.

Eric Johnson, who is Lockheed Martin Associate Professor of Avionics Integration, pursues ongoing research efforts in fields from collaborative mapping to autonomous load handling. Sponsors include Sikorsky Aircraft, the Defense Advanced Research Projects Agency (DARPA), the National Aeronautics and Space Administration (NASA), and the National Institute of Standards and Technology (NIST).

“An early major research effort in aerial robotics was the DARPA Software Enabled Control Program, which used autonomy to support vertical takeoff and landing of unmanned aircraft,” Johnson said. “Many of our subsequent projects have been built on the foundation started at that time.”

Johnson and his student team are pursuing multiple projects in areas that include:

- Vision-aided inertial navigation – This technology uses cameras, accelerometers, and gyroscopes to allow an autonomous aerial vehicle to navigate in conditions where GPS information isn’t available. Such situations include navigating inside buildings, flying between buildings, and operating when satellite signals are jammed or spoofed – falsified – by hostile forces.

- Sling load control – Delivering large loads suspended underneath an aircraft is tricky even for manned rotorcraft; autonomous vehicles can encounter major stability issues during such missions. Johnson and his team are tackling these load control challenges, and are even working on a project that involves an autonomous aircraft delivering a load to a vehicle that’s also moving.

- Collaborative autonomy – Johnson and his team recently demonstrated a collaborative mapping application that lets two autonomous rotorcraft use onboard laser scanners to cooperatively map an urban area. In a flight experiment at Fort Benning, Georgia, the two aircraft not only shared the mapping chores but also warned each other of hostile threats nearby.

- Fault tolerance control – These techniques allow aircraft to autonomously recover from major unanticipated failures and continue flying. In one demonstration, Johnson and his team enabled an unmanned aircraft to remain aloft after losing half of a wing.

- The Georgia Department of Transportation – Johnson and Javier Irizarry, associate professor in the School of Building Construction, are studying ways in which the state of Georgia might use unmanned vehicles to perform routine functions such as inspecting bridges and monitoring regulatory compliance in construction.

Meanwhile, AE student teams have placed strongly in recent competitions involving autonomous aerial vehicles, noted Daniel Schrage, an AE professor who is also involved in autonomy research. They have secured first place finishes in:

- The American Helicopter Society International 3rd Annual Micro Air Vehicle Student Challenge. Directed by Johnson, the Georgia Tech team reproduced the functionality of a 200-pound helicopter in a tiny 1.1-pound autonomous rotorcraft, which accurately completed the competition’s required tasks.

- The American Helicopter Society International 32nd Student Design Competition. Along with a team from Middle East Technical University (METU), the Georgia Tech undergraduate team developed an autonomous rotorcraft capable of delivering 5,000 packages a day within a 50-square-mile area.

Bio-Inspired Autonomy

Certain self-guiding aerial robots could someday resemble insects in multiple ways. Professor Frank Dellaert of the School of Interactive Computing and his team are taking inspiration from the world of flying creatures as they develop aerial robots.

The research involves mapping large areas using two or more small unmanned rotorcraft. These autonomous aircraft can collaboratively build a complete map of a location, despite taking off from widely separated locations and scanning different parts of the area.

This effort includes Dellaert’s team and a team directed by Nathan Michael, an assistant research professor at Carnegie Mellon University. The work is part of Micro Autonomous Systems and Technology (MAST), an extensive Army Research Laboratory program in which Georgia Tech and Carnegie Mellon are participants.

“This work has a number of bio-inspired aspects,” Dellaert said. “It supports the development of future autonomous aerial vehicles that would have size and certain capabilities similar to insects and could perform complex reconnaissance missions.”

Called Distributed Real-Time Cooperative Localization and Mapping, the project’s technology uses a planar laser rangefinder that rotates a laser beam almost 180 degrees and instantaneously provides a 2-D map of the area that’s closest to a vehicle. As the quadrotor flies, it collaborates by radio with its partner vehicles to build an image of an entire location, even exchanging the laser scans themselves to cooperatively develop a comprehensive map.

This work can be performed outdoors – and indoors where receiving GPS signals is a problem. Using built-in navigational capability, the collaborating vehicles can maneuver autonomously with respect to each other, while also compensating for the differences between their takeoff points so they can create an accurate map. This performance was made possible by novel algorithms developed by the two teams and by Vadim Indelman, an assistant professor at Technion - Israel Institute of Technology.
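Compensating for different takeoff points amounts to expressing each vehicle's scan in one shared coordinate frame. The Python sketch below illustrates that geometry only; it is not the teams' algorithm. It assumes the relative pose between vehicles is already known, whereas estimating that pose is the hard part the novel algorithms address. All function names and numbers are hypothetical.

```python
import math

def scan_to_points(ranges_m, start_angle_rad, angle_step_rad):
    """Convert planar rangefinder readings to (x, y) points in the vehicle frame."""
    return [
        (r * math.cos(start_angle_rad + i * angle_step_rad),
         r * math.sin(start_angle_rad + i * angle_step_rad))
        for i, r in enumerate(ranges_m)
    ]

def to_shared_frame(points, pose):
    """Apply a 2-D rigid transform; pose is (x, y, yaw_rad) in the shared frame."""
    px, py, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return [(px + c * x - s * y, py + s * x + c * y) for x, y in points]

# Hypothetical: vehicle B took off 5 m east of vehicle A, rotated 90 degrees.
scan_b = scan_to_points([2.0, 2.0], start_angle_rad=0.0, angle_step_rad=math.pi / 2)
merged_b = to_shared_frame(scan_b, pose=(5.0, 0.0, math.pi / 2))
```

Once both vehicles' points sit in the same frame, they can be combined into a single map of the area.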